Sync and BTSync Detailed User Manual

Note: This manual no longer focuses exclusively on BTSync,

and there are numerous reasons for it. We will, from now on,

focus on the sync process as such and will cover all the available

sync alternatives. We will also start working on collecting the

information base for creation of an open source alternative to

BTSync.

And it is a pleasure to mention that even at this point there

exists enough information to create a truly open source BTSync

alternative, and even more than that, we already have at least

two working BTSync alternatives.

You can ask questions, make proposals or simply

chat with other participants who are online and

have BTSync running, and everyone will see it within seconds.

To do that, you need to create a new BTSync folder for the chat

page with the following r/w key ("secret"):

AR333HZMSCBUQ3XVO3SIAVZAXEXZTAL5Y

You can just keep this page loaded in your browser and simply refresh

it periodically and you will almost immediately get new messages.

So it becomes like a chat.

Contents

Open source sync design

Existing open source sync alternatives

Syncthing (Pulse)

Ego politics

Other open source sync alternatives

BTSync

Problems and solutions

Installation and configuration

O/S Independent issues

Linux

Principles of operation

Edit History

Contacts: Where to get help?

If you have any problems using the BTSync program, send an email to: preciseinfo [@] mail [.] ru.

Get involved, instead of sitting on your butt

Get involved, instead of sitting on your butt, hoarding

your creative energy and waiting for some "Messiah" to come

and "save" you. If you only take, but never give anything,

you will never get enough to satisfy you finally.

Because in that case you are simply wasting your life

in endless turns of the Wheel of Karma, as they call it,

or are "spinning your wheels, getting nowhere really fast".

No matter who you are, you do have something to contribute.

For this is the Law. The Law of Life itself! All you need

to do is to look inside yourself and pay attention to that

which "turns you on", something that attracts you, something

that excites you and makes you want to be.

And then you simply give whatever "turns you on" without worrying about condemnation

and denials by others, made, primarily, out of their blindness

and self-denial. You shall see this one day. It is just a

matter of time WHEN you do, at least if you are sincere and

are honest enough to yourself.

If all those of you, who really understand and know something,

simply add what they know to this manual and make sure to

add the new chapter/topic to the TOC, can you imagine how

much you would help all sorts of people struggling "out there"

with artificial problems that only exist because they simply

do not understand how it all works? Well, all that wasted energy

can be redirected to CREATIVE things, instead of wasting it,

struggling with the paper dragons.

So, let this manual become truly alive with every drop you

contribute to it, and, with it, YOU will. For it is the Law,

the law of Life itself as expressed by the Infinite

Multidimensional All-Pervading and Ever-Unfolding Intelligence.

The purpose of this manual

The purpose of this manual is not to merely duplicate the information

available elsewhere, including the "official" BTSync manuals.

Rather, it is to provide comprehensive and detailed coverage

of some of the tricky issues and describe as many different

aspects of BTSync behavior and its effects as possible.

This means that not every single conceivable issue is covered here,

even though we attempt to cover as much as possible.

The second point is to hopefully give an example to other

software manufacturers or developers of how to write documentation

which covers all the minute details of your products in such a way

that even users with the most basic understanding of computers

could use your products without going to all sorts of forums

and asking about those things that should have been explained

in the first place.

Many, if not most, software manufacturers and developers in most

cases provide only scratchy documentation that leaves way too

many questions in the minds of ordinary users. Instead, they keep

placing almost all of their efforts on developing all sorts of

bells and whistles and "features" of all kinds, as a result of

their never ending market department driven attempts to grab a

bigger and bigger "market share", and BT is about as perfect

an example of it as it gets.

So, instead of covering the most important issues in their

documentation and fixing the critical bugs, they dedicate most of

their efforts on adding more and more "bells and whistles" to their

product while issues urgently needing resolution remain unattended.

Their basic product is not fully described in a clear, concise and

detailed way.

If you look at their blog, you might be amazed at the amount of

hype they keep producing in their massive marketing efforts.

http://blog.bittorrent.com/

And if you subscribe to their RSS feed, you'll be getting the

announcements on all sorts of "bundles" packaging all sorts

of zombie "entertainment" nearly every other day.

http://blog.bittorrent.com/rss/

But if you look at their BTSync forums, you'll be amazed, if

not "blown out of your chair" outright seeing the amount of

problems users experience, and plenty of those problems are of

CRITICAL nature, the issues that have to be resolved ASAP.

But many, if not most of them, simply remain unattended as

you can see by the age of some of those threads.

The result of this is products that work in most cases, but in

some cases of "this should never happen" kind they either do not

behave correctly or simply do not work - period.

For example, we have observed countless cases where new users

join the share but never actually start downloading it.

So, they sit there for a while and then leave, never to be seen

again. At the moment, we are seeing over half of the nodes on one of our

main shares not being in the state of 100% completion, and they

stay that way permanently, never completing, at least as it looks

to other nodes. We have also seen cases where some nodes begin

to download, but then stop for some reason and never resume

the download, never completing the initial download. And

we have seen the cases where they do manage to complete downloads

if you restart BTSync on a master share. And we have seen plenty

of cases where nodes permanently remain in a locked state as

a result of time difference issue between the nodes, which is

also one of the most critical issues that had to be resolved

immediately, but has remained unresolved for nearly a year now.

Now, every single issue above is of CRITICAL level and has to be

resolved immediately. Because the program simply does not work

or works in fundamentally incorrect or totally unexpected ways.

The users install the program, try to join some share and sit

there for a few minutes waiting for something

to happen. But, since nothing happens, they simply leave the share

and probably simply uninstall BTSync, thinking that "this stuff does

not work". And what could be MORE critical of an issue than not

being able to perform even the initial download? How is this even

possible technically?

They do not seem to understand that about the MOST important

criterion in any product is not necessarily the quantity of

bells and whistles, but the robust and predictable behavior

and the ability to recover from any situation, conceivable

or "impossible".

And the second most important property of any product is

precise and detailed documentation that could be understood

by "mere mortals", and not just some one-liners of a smart

kind that could be understood only by professionals.

Documentation should be as easy to read as you read a newspaper.

You should not be sitting there, scratching your head and

wasting your mental and creative energy on trying to think

"what do they mean by that?".

So, by reading good documentation you effectively become

an expert as far as product use goes. Simple as that.

And, finally, if not most importantly, the overwhelming

volume of problem posts on their forums, for the most part,

is the result of users simply not knowing or not understanding

how things should work under normal circumstances, simply

because it is not documented in the manuals. When we were

introduced to BTSync, we could not quite believe how scarce

and sketchy their documentation is and how few of

the most critical aspects of the program's behavior were described.

Forums are not the place to provide documentation. Otherwise,

you see a large amount of traffic on them that would not be

there if it were all described somewhere. As a result, their customer

support on the forums is simply overwhelmed with the number of questions

and issues.

The end result of poor documentation is wasting a lot of energy

that could be used for creative purposes and not merely wasting

one's time struggling with paper dragons and purely imaginary

issues that shouldn't be there to begin with.

Building the sophisticated information distribution systems

You can build the sophisticated information distribution systems

of a synthetic kind where one part of your system exhibits the

private network behavior and is not visible to others, who are

not part of your private network, and the other part of your

system exhibits the purely public aspect and can be accessed by

anyone as long as they have a key to some share/top folder/collection.

Here is one example related to "hot" and "not so hot" information

distribution via web sites:

In this example we will use the combination of BTSync and Syncthing (Pulse)

working together.

Assume you have a web site on a server somewhere and you have a working

system, possibly at home. You might be doing all sorts of updates and

edits on all sorts of information in all sorts of subdirectories of

your site which you would like to sync to the site as soon as you push

the save button in your editor. This way, you do not have to worry

about remembering which exact subdirectory of your site the information

belongs to and you don't have to waste time on using the FTP client

to update the server, which requires a number of steps before you

can actually transfer the files.

So, you can use the BTSync via privately known key to update the

site automatically and in "hot mode", meaning that as soon as you

push the save button in your editor, the information gets shipped

to the server within seconds, and without any further action on

your part beyond pushing the save button in your editor. The rest

of the process is fully automatic.

But then, you would also like to make this site available on the

sync basis to the general public so that they could receive the

"latest and greatest" updates nearly instantly. Except there is

one "but". In case you are working with some document in active

mode, which might imply a lot of updates and save cycles, possibly

every few seconds, you would not necessarily want to update the

sync clients as often as you update your site.

So, what you do is to create another folder on your disk which

contains the same exact copy of the site, but in "not so hot"

mode, so that you could decide at some point: ok, we are kind of

done with this cycle of updating this document and now it is ready

to be synced by the whole world.

To do that, you establish another connection to that 2nd folder,

but using Syncthing this time. What it does is feed

your 2nd folder from the "hot" site back to your home box.

Finally, you feed that 2nd copy of your site to the whole world

via BTSync, only on a different share/folder. So, as soon as

your site on the web server is updated, it feeds the info back

to the 2nd folder and is served to the whole world via BTSync.

So, to make that 2nd folder to be not so "hot", all you need

to do is to stop the Syncthing that feeds back the information

from the site to your 2nd folder. Then, once you feel this edit

session is complete enough, you re-enable that Syncthing

channel and it will bring back the site to the 2nd folder that

is serving the world now.
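The data flow described above can be sketched as a toy model. This is only an illustration of the "hot" and "not so hot" idea, not BTSync or Syncthing code: the class name `Pipeline`, its fields and its methods are all hypothetical, and plain dictionaries stand in for the three folders.

```python
from dataclasses import dataclass, field


@dataclass
class Pipeline:
    """Toy model of the three folders; each dict maps file name -> content."""
    work: dict = field(default_factory=dict)    # working copy on the home box
    server: dict = field(default_factory=dict)  # "hot" copy on the web server
    public: dict = field(default_factory=dict)  # "not so hot" 2nd folder
    feedback_enabled: bool = True               # stands in for the Syncthing channel

    def save(self, name: str, content: str) -> None:
        """Pushing save in the editor: the private link updates the server."""
        self.work[name] = content
        self.server[name] = content
        self._feed_back()

    def set_feedback(self, enabled: bool) -> None:
        """Stop or re-enable the channel that feeds the 2nd folder."""
        self.feedback_enabled = enabled
        self._feed_back()

    def _feed_back(self) -> None:
        # The public copy follows the server only while the channel is running.
        if self.feedback_enabled:
            self.public = dict(self.server)
```

In this sketch, calling `set_feedback(False)` before an editing session freezes the public copy while the server keeps receiving every save, and `set_feedback(True)` brings the public copy up to date in one step.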

One other benefit you receive with this approach is that

you also assure a certain guarantee that your original information

does not get damaged because of the actions of other users from

the general public that might do some crazy things with their copy, which, in turn,

might update all the other nodes with wrong or bad information.

This is quite possible with BTSync and we have seen it many

times in different situations, either because of the program

misbehaving or because some users do things not really understanding

what they are doing. And we have also seen plenty of cases when

some users intentionally try to attack the share. In this case,

under no circumstances would you like your original information

to get damaged. That is why you establish a private channel only

between your working system and your server. In this case, you

know 100% that you do on that link only those things that will

not cause the damage to the original information.

So, the private side of your system can be controlled by you

in a predictable and reliable manner, because you control and

have access to both ends of that link, but the 2nd folder, which is connected

to the general public, might and is likely to get damaged, sooner

or later, because of all sorts of reasons. Moreover, some degree

of damage may be observed on a more or less periodic basis. We

have seen some shares getting damaged every few days, mostly

because not that many people really understand what they are trying

to do and the consequences of it.

But you can recover from that damage since you also control the 2nd

folder and the original state of the data can be restored within seconds

simply by copying the original data over the damaged copy. This

can be done with a single command. You just need to remember to

copy the entire directory tree preserving the file modification times.
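As a minimal sketch of such a restore, assuming Python 3.8 or later is available on the machine that holds both folders: the function name `restore_tree` and the paths in the usage note are hypothetical, and this is just one way to copy a tree while keeping modification times.

```python
import shutil
from pathlib import Path


def restore_tree(original: Path, damaged: Path) -> None:
    """Copy the original tree over the damaged one, keeping modification times."""
    # copytree copies each file with shutil.copy2 by default, which preserves
    # modification times; dirs_exist_ok=True (Python 3.8+) lets it write into
    # a destination tree that already exists.
    shutil.copytree(original, damaged, dirs_exist_ok=True)
```

A call might look like `restore_tree(Path("/data/site_original"), Path("/data/site_public"))`. Note that this overwrites damaged files but does not delete extra files that exist only in the damaged copy; those would have to be removed separately.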

So, what you have effectively, is a synthetic system that exhibits

the behavior of totally private connections, but, at the same time, any part

of your information may become public any time you want and all

of it will be updated automatically without any need for you

to do any additional things in order for the entire system to

function as a whole, and in any mode of operation you might think of,

"hot", "not so hot", or even in "slow motion".

Interactive information systems with nearly instant delivery

What the above mentioned synthetic information system also means

is that you can get really creative and can concentrate primarily

on the creative aspects of working with information, instead of

being bogged down by purely technical aspects of information

management and the routine work. This way, your creative energy

could be used at its peak, without worrying about all sorts of

routine technical things and tasks.

Once the system is set up, you no longer have to worry about

many things that assure the whole thing works. Everything,

but the creative aspect itself, becomes fully automatic and

nearly instant delivery and distribution of information becomes

guaranteed and fully automated.

In other words, now has come the time, finally, "to get down with it"

and investigate and expose the REAL issues facing this planet,

as you no longer have any excuses of not "doing a good job"

because of some bizarre or overwhelming technical aspects and

issues.

What else can we say?

What does sync have to do with New World Order?

Note: the following chapter(s) were originally written in the context

of conversion of Syncthing to Pulse and, shortly after that,

a change of copyright license, which has profound effects on the

entire project. So, the implied reference to be kept in mind is

the Syncthing/Pulse project.

Well, in a single word: EVERYTHING! Believe it or not.

In relationship to Amazon.com showing up in the whois request,

whatever that means or implies, one can mention this kind of thing:

If, by any chance, Amazon.com is in fact involved in any of this,

then the question arises: But who stands BEHIND the Amazon curtain?

For example, it is alleged that behind the BTSync stands

no one less than the NSA, and that is pure grade evil and global,

all pervasive surveillance and identification of the "undesirables"

to be placed on the so called "red list" of those individuals

that are considered "dangerous" in the view of the powers of evil

and this NWO thing.

Interestingly enough, even the veterans returning home from the

war zones are considered to be "the most dangerous" kind, if you

can imagine such a degree of evil. Those that are promoted as

"heroes" and "fighters for freedom and democracy", in reality,

are in fact considered to be the "most dangerous" of all. And

those soldiers do not even realize any of this until they

return back home and cold and brutal reality hits them between

their eyes.

Most people childishly believe that there simply must be some

limits to evil and it can not go against the most basic things.

Well, sooner or later, they'll have a chance to realize that

the evil has no limits whatsoever, ethical, moral or otherwise.

That is its very nature. It does not even have a concept of

some bounds or limits, just as shown, for example, in a book

called "Atlas Shrugged" by Ayn Rand, who was one of the

mistresses of Rothschild and this book, according to John Todd,

a former member of "the council of 13", was created as per

request of Rothschild as indoctrination book for the Illuminati.

Kind of mind programming book in a psy-ops kind of scenario.

John Todd's introduction to Atlas Shrugged

(about the famous Illuminati mind conditioning book by Ayn Rand)

https://antimatrix.org/Convert/Books/Ayn_Rand/Ayn_Rand_Atlas_Shrugged.html#John_Todd_introduction_to_Atlas_Shrugged

And that opens the whole Pandora's box that leads to this thing

called the NWO. Actually, anything one deals with in the modern

world, eventually and inevitably leads to the same thing, and that

is takeover of this planet via "The Plan" of planetary takeover,

which has been nearly completed in full at this junction.

And so let us diverge a little bit from the "main subject",

and attempt to cover just a few basic things about that scheme, because,

first of all, these are some of the most vitally important things

that are happening on this planet, by far.

Anything else simply pales in comparison, in terms of

significance or the impact, at least from the standpoint

of the global information war raging on as we speak, and if one

underestimates the consequences of that, then, as they say

"you are as good as dead".

And about the sorriest thing is that not that many people even realize

what is REALLY going on. They just buried their heads in the sand and

"play dumb", like it is "not my problem". So...

For example, on BTSync forums there was one thread that specifically

addressed the issues related to the NSA, and it was one of the most

active threads. Interestingly enough, the BTSync staff has not made any

public statements on it or about it in order to clarify some issues.

Then, all of a sudden, that thread was simply deleted, without saying

a word of comment or providing a reason for it! Why would that happen

and what would it imply?

Furthermore, one participant on that thread has said that he definitely

has seen some "strange behavior" of some things, except he did not

wish to disclose exactly what was it, and for quite obvious reasons,

once you realize what you are dealing with.

Are claims of security and encryption real?

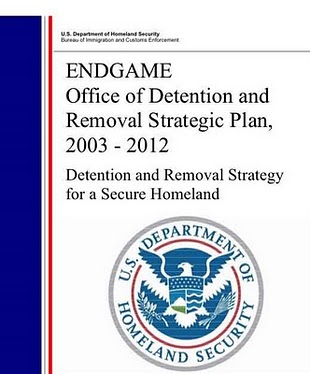

And the idea behind the so called "red" and "yellow" lists is that all

those that end up on the "red list" are to be exterminated first,

or thrown into the concentration camps according to the NWO plans.

In the USA alone, at this junction, there exist over 100 concentration

camps, with gassing chambers, efficient furnaces to burn the bodies

and the rest of it. The "red list" as of couple of years ago contained

about a MILLION people, if you can comprehend the grandness of the scale

of this operation code named "Project ENDGAME". Barack Obama has issued

a secret order to activate that operation back in 2009.

This information is publicly available, from Alex Jones, for example.

"The bottom line" is that one can not forget that we live in the world

of evil most profound, and all the so called governments throughout the

world and even the international organizations, such as UN, EU and so

on, are merely the puppet theaters, stuffed with puppets that sold their

souls for the benefits of stuffing their own bellies at the expense

of all others. For example, Obama bluntly, and even jokingly states:

"there is a theatrical aspect to politics" and governing. One can only

add that it is all MOSTLY theatrical. The reality aspects of it are

minuscule.

Look for FEMA concentration camps. Btw, hundreds of thousands of caskets,

made out of special plastic and capable of storing at least 3 bodies in

each one are stored all over the USA. Over 30 THOUSAND guillotines

are stored in two states in the US alone. With that many guillotines

you can "process" people at the rate of chickens on a chicken factory.

So, we are talking about MASS murders of genocide scale, unseen in the

entire history, done in the name of "protecting freedom and democracy"

and "fighting terrorism". Can you imagine millions of "terrorists" or

"extremists" in the USA alone?

So, the same exact people that were called the "dissidents" before,

are now classified as "the enemies of people", the "extremists"

and even the "terrorists", all of which is pure grade lies and fabrications,

just as was explained by Nicholas Rockefeller to Aaron Russo in their friendly meetings.

(Note: you can find the interview with Aaron Russo by doing

a search on "Aaron Russo: Reflections and Warnings - Full Transcript".

In this profoundly shocking interview he talks about his friendly

meetings with Nicholas Rockefeller, who, for example, explained

to him the scam of 911 and how it was meant to create a totalitarian

state in the USA. He also talks about the mechanisms used by "the

banking mafia" to rob the world and create mountains of fake "money",

not backed by anything other than thin air.)

This is not just a bad joke, by ANY means. Except you need to study these

subjects to even BEGIN to comprehend what might be taking place with

this seemingly strange "conversion" of Syncthing into Pulse brand

and all the alleged "support" by the corporate "sponsors".

The problem for the NWO "elite" is true information that discloses

their evils and exposes their scheme of world takeover.

That is why it is being suppressed on a global scale and the so called governments,

who, in reality, are nothing more than mere puppets of the NWO puppet masters,

have already passed all sorts of "laws" to suppress the true information by

classifying it as either outright "terrorism" or "extremism".

Interestingly enough, most of those "laws" and "executive orders"

go against The Universal Declaration of Human Rights in the most direct

and blatant ways.

We are in the global information war right now and of the magnitude

never seen by the mankind in its entire history.

And that is precisely where sync technology comes into this picture.

Sync on P2P basis is the most urgent need in today's Information Technology

It is very unfortunate, and even sad, that even the "advanced crowd",

the developers, do not seem to be "getting it". They seem to look

entirely oblivious of the facts and events in the world that have

the most profound significance and the impact on the present and

future of the entire planet. They watch the puppet theater on TV

and think that this is something that represents or corresponds

to reality. Because via mass media their minds have been zombified

to believe so. And, since their minds have been programmed with

the ideas of "I don't care, this is not MY problem", they believe

that "their world" extends to the surface of their skin, nothing

more than that.

It seems that "the smartest of all", at least as they think of themselves,

the developers, are either "playing dumb" and pretend that the issues

of public distribution of information on P2P basis do not have any merits

and "use cases", or have simply buried their heads in the sand, pretending

that it is all nothing more than "none of my business",

"let it all go to hell for as long as I can save my own skin and stuff my

own belly" kind of thing.

It is as if the only thing everyone is interested in is to

sync their laptop to their camera, their server and a chip in

their shoe, if not in their brain. That is ALL they seem to "care about".

Yes, all this sync stuff is good and all that, and it does serve some

local, individual purpose. So, now you can push a single button

and you get all your devices automatically updated with the latest

collection of the zombie images, sound and video files and you name it.

Push one more button, and it all gets delivered directly to your ears

and eyes, so that you could get zombified faster with all sorts of

images of the most horrendous violence, wars, killer "heroes",

conmen of all kinds and the rest of the zombification menu of the

NWO grand "plan", of which very few even know about, or even care or

suspect that such a thing actually exists, and even if it does, "hey,

what does it have to do with me and 'my interests'? It is not MY 'problem'!"

Then WHOSE "problem" is it? Do ANY of you know, by any humble chance?

And so, it is not entirely incomprehensible that the "developer-in-chief"

even gets vicious in his utter denials of the very need for public

information distribution issues, which is probably the most significant

development this planet is going through at this junction, as far as

purely technical issues are concerned.

Because how can you defeat the evil if you have no clue of what it

is, how does it work, what are its tools and methods, who stands behind what,

what are the "plants" and what kinds of schemes have been developed

and implemented for centuries?

There is an ongoing massive attack on the Internet going on as we speak,

and all the corrupt puppet governments and their agencies issue orders

to block more and more sites, and even worldwide, without any court orders

or proper legal proceedings in gross violation of The Universal

Declaration of Human Rights, articles 10, 11, 18, 19, and 30.

http://www.un.org/en/documents/udhr/

Because the powers of evil that have already taken over this planet

for the most part and control over 80-90% of the world's gold, precious

metals, diamonds, energy supplies, banking system, media and publishing,

and have taken over the money printing business for all the major world

currencies with the help of the FRS scam, know very well, that their

demise and inevitable defeat begins with true information, disclosing

the nature and the mechanisms of evil and principles of world takeover.

They were able to suppress and destroy all sorts of most vital

information about the nature and mechanisms of evil, and instead,

via the zombification machine called mass media, were and are feeding

everyone lies, perversions, deceit and the ideology of violence and

destruction, and have programmed the human minds to be interested

only in the most idiotic, perverted, corrupt and violent things,

promoting greed as some kind of "objective necessity" and a basic

mechanism of "survival", and so they were able to do to this planet

anything they wish in pursuit of their final goal of taking over

the world via the scheme known as the NWO.

But, even despite their most vicious methods of destruction of

information and the authors and publishers, all of a sudden, it all

floated back up to the surface, seemingly out of nowhere. And that

is their most deadly problem, because it has spread out the waves

of energy of awareness throughout the planet and the people

throughout the world have begun to revolt against the entire

satanic doctrine of Luciferianism, that has been ruling this

planet for thousands of years.

But to stay well informed about methods and history of evil,

you now need the tools and methods that allow you to switch

to a different mode of operation. Instead of attempting to

access the sites and collections of information directly,

you need to be able to access it no matter what, on P2P

basis. There is simply no other way to prevent and stand against

all the tools and methods of blocking the information of all kinds

even bypassing the law itself, and unless millions upon millions

of people know exactly what they are dealing with, there is no

way they will be able to defeat the most powerful and sophisticated

system of evil that has been developed and perfected for

thousands of years.

Before you can stop the evil, or cut off the parasitic entities that

suck all your resources of all kinds like a black hole, and restore

the environment of this planet and human dignity and real

fairness in all sorts of things and activities, you need to KNOW

how that system of evil works.

You need to study it all, which used to take years until now, but now can

be done in months, if not days and some things - in minutes.

Or do you expect that some "noble knight on a white horse" will come

and "save" your sorry rear end, while you are sitting on it, doing

nothing and pretending you are merely dumb as a piece of wood, not

to insult the piece of wood?

WHO is going to come and "save" you if you are not willing even

to move a finger to save your sorry ass which is on fire, regardless

of whether you realize it or not?

For how long are you, the false "kings", going to deny the utmost

need and urgency of implementing the global public sharing of all

sorts of information via sync technology, which is the only

technology that allows you to stay current even with the immense

amounts of information via single collection and a single key?

The thing is that even torrents can not do that. Because torrents

are static. Once created, that's it. They can not be updated,

extended, modified and so on. How many times do you need to be

told all this before it clicks in your "sophisticated" minds?

Look, you "smart guys", even a FIVE year old child can comprehend it.

Why can't you? Are your brains so profoundly damaged and zombified

to the point that you can not put two and two together?

Are you going to ask "but what are the use cases for that"?

Try to "prove" that you need to even bother about two plus two.

"Who NEEDS it"? What are the "use cases" for that?

Well, what can we say but "it depends on how dumb you are", or

pretend to be! It is not inconceivable that by now even Life as such

is nothing compared to all the images of death they feed you

non stop, any place you look.

Yes, the situation on this planet has never been as pathetic

and as hopeless as it is right now, and that is precisely why

you have a chance to correct it, once and for all. You need to

come to a state of utter hopelessness and desperation before

most of you even move a finger, and even then, only for the

exclusive purpose to "save their own ass", pathetic as it is.

This is all you get from us in this transmission. Either you

wake up, or be prepared to face the consequences of your denials,

utter insensitivity towards each other, and even Life itself,

and utter absence of care within you. Simply because your minds

have been totally zombified with the ideas and images of death,

destruction, violence, parasitism, cunningness, corruption

and the rest of it.

It is up to YOU to decide which world you wish to live in.

No one can do it for you. Because it is against the Laws of

Free Will and Free Choice to interfere in your affairs, whatever

they might be, unless it has all come to its final, logical

fruition, at which point, it can no longer be allowed and

the forces beyond visible simply have to get involved directly

and in the most tangible ways conceivable.

Comparison of file synchronization software

Here is the link to the Wikipedia article comparing different sync packages.

Comparison of file synchronization software

https://en.wikipedia.org/wiki/Comparison_of_file_synchronization_software

Open source sync alternatives

Introduction to open source sync alternatives

This is just a stub for now. This section will begin to

accumulate the information about the open source alternatives

to BTSync.

It is our opinion that BTSync, at least the way it is right now,

has no future, and for quite a few reasons:

-

The last updates from 1.3.109 to 1.4.72 and higher include the

major GUI change and the position of the management is "this is the

way it is going to be from now on, regardless of anything anyone

says or thinks". And that means a catastrophe. The "latest and greatest"

GUI is web browser based and it is a huge step back from the previous

design, which provided a reasonably detailed view on dynamics of nodes

update.

In particular, what used to be the Transfers tab was very useful

to see which file is sent/received from which node. You could see the

simultaneous transfers to/from different nodes and that gave you a

sufficiently good idea of what is going on. That tab is gone now and

you can not see the actual transfers taking place.

The Devices tab in the old design would show all the shares/folders

and all the nodes participating in those shares. So, in a single screen

you could have a pretty good overall idea of what is going on. That

screen is gone now and you have to click on things just to open up

another screen or semi-dialog, where you still would not see what you

could in previous design. And so you have to click more and more times

to see anything useful, and even there, its usefulness is questionable

and information provided is clearly insufficient.

This fact alone is a killer. If that is the way it is going to stay,

then BTSync is dead. Quite a few people have decided to go back to

previous version.

-

It is a closed source software which implies that you do not

really know what happens "under the hood" and you can not trust

a word about claims of encryption of traffic or increased privacy,

first of all because no claims of encryption or increased

security or privacy can be verified in closed source code, pretty much

by definition. Any security specialist knows that as well as 2+2=4.

-

The incredible number of bugs in BTSync and its generally poor stability

and consistency of behavior, combined with the fact that it is a closed

source code, makes it impossible for other developers to fix piles upon

piles of bugs, problems and the issues.

-

BT strategy on BTSync seems to be a desire to corner the sync

market via distribution of various "bundles" of zombie/biorobot

level of entertainment. As a result of this, and, possibly because

BT may have some contract arrangements to get "the piece of the pie"

of total volume of traffic in the "entertainment industry", it is only

"natural" for them to hard-wire the mechanisms of tracking the traffic

of any node running BTSync. Therefore, whenever you run BTSync

you leave your footprint at BT.

-

There are reasons to believe, or at least suspect, the cooperation

of BT with NSA and other agencies engaged in global surveillance

and you can find some references about it in this manual.

-

BT is primarily interested in creating as much hype as possible,

instead of fixing bugs in the software to make it stable, reliable

and have a predictable and consistent behavior. Instead, they keep

developing all sorts of new bells and whistles to entrap more and

more unsuspecting customers or clients in order to control as much

of global sync traffic as possible. They regularly brag about the

mind boggling statistics of global volume of sync traffic, and to

have that ability, they'd have to hard-wire the mechanisms of informing

BT about any and all sync traffic that is tracked by BT servers,

trackers and relays.

In fact, you can not even turn off the ability

of being tracked even if you disable their trackers and relays.

Because the program simply downloads the list of servers automatically,

without informing the user. Even if you disable their trackers and

relays, still, for some magical reason, you can discover the other

nodes, even if you disable the DHT.

-

BT keeps bringing up new and new features and "improvements" in a

product which they themselves classify as Beta, which implies that the

product is not becoming more stable, but just the other way around,

less and less stable. In beta stage, no development or new features

are allowed. Otherwise you will never get to the state of the final

version. Because bringing in new "features" will bring in new bugs.

But they not only keep bringing new "features", but even do some

major and quite radical redesign of the most basic mechanisms,

which is an absolute "no, no" in genuinely Beta phase of development.

-

BT is not willing to disclose the necessary information to create

the compatible products, which, by itself, is evidence of their

desire to monopolize the sync market.

-

The project management, supervision and technical competence

of their developers are of such a low grade that it isn't even

laughable. It is simply pathetic. For experienced developers, some

of the design errors and incompetence in technical sense are simply

obvious, and would never have been allowed if the level of technical

competence were anywhere near professional.

Just looking at one example makes things pretty obvious. As it stands, BTSync

begins to "forget" the nodes it knew about on a particular share if

you keep the program running for several hours. But this should never

happen, simply because once the node discovers any other nodes there is

no logical way for it to "forget" them. How could you possibly forget

something you knew? This is evidenced by the fact that if you restart

BTSync it will again find those nodes that it "forgot" about before

you have restarted.

-

There are numerous other examples of poor or even

bad design in the GUI, and we mentioned a few of them on the BTSync

forums months ago, but no improvements can be seen even today. In other

words, they did not move a finger or even look at any of it,

as if it were something utterly insignificant. But to the users, fixing it would

provide a much better, more complete and detailed picture of the whole thing,

and some of the ideas we proposed would even help to identify numerous bugs

much quicker than is possible now.

We could continue this list for quite a while, but what's the point?

As a result of this, it is pretty clear that one can not expect

BTSync to become a stable and reliable product, at least in the

foreseeable future.

That is why we began to accumulate the information on developing

the open source alternative, and it will start right in this manual,

which is no longer dedicated exclusively to BTSync, but, rather,

to the sync technology as such.

Highly desired features

This is just a stub for now. This section will begin to fill

with those features of sync programs that distinguish it

from the "stone age" of syncing.

First of all, it is not a bad idea to keep in mind that

syncing, as such, is still quite an immature technology.

Furthermore, it is highly complex from the standpoint

of all sorts of logical cases or consequences of all sorts

of conditions and events, and there are so many of those,

that we can not quite enumerate them all.

Some of the most critical parameters of the sync process are

as follows:

-

Stability, consistency of data across all nodes and

predictability of behavior.

-

Detailed and precise reporting of various operations and

their results, be it warnings, errors or success results.

-

Being able to see the entire process in as precise and

as detailed way as possible.

-

Precise and detailed view on dynamics of the process,

especially during the active periods when a number of

nodes begin to upload or download the entire folders/shares/collections.

-

The ability to control the process, down to the level of

individual nodes. This includes putting some share on hold

or putting the individual node on hold, possibly only on one

particular share. This includes the extreme cases of suspending

or even banning the nodes for various reasons, such as when you

see some people that are essentially the parasites, getting as

much as they can and then splitting and not giving anything to others.

-

The ability to customize the user interface, such as adding,

removing, rearranging some columns in various dialog boxes,

resizing the GUI elements, setting fonts and colors and all

sorts of other visual or convenience things.

-

Detailed and precise and complete error and warning reporting.

Additional problems of an open source implementation

This is one of the aspects of the open source that could be

easily overlooked, which might cause the most drastic problems.

It is applicable to the situations where the information exchange

does not necessarily happen within some trusted group of people

or between different devices belonging to one individual.

And this is particularly applicable to any public information exchanges,

regardless of anything else.

The thing is that in open source alternative you have to be

extra attentive as to how you design your algorithms and various

data structures passed between the nodes and how you identify

various things with confidence that they are not faked.

For example, in BEP (Block Exchange Protocol) definition there

is a concept of a local database, in essence, which is sent out

to all other nodes upon request. Now, in r/o nodes, there might

be some files that are not present in the original master node

(r/w), and so, various decisions might be made by other nodes

as to ways in which that additional information may affect the

other nodes. Because that information may look like a new version

of some existing file, or a new file that has been stamped as

being present on the master node, while it was merely faked.

In that case, some attacker, who does not even have a key to

be the master node, may effectively cause an update even of

other master nodes, just because, for example, he might stamp

some files with some flags that indicate that they originally

came from some master node, which is not true. In that

case, one master node may decide that some other master node

has added a new file, which may cause a download from the

r/o node of any garbage files created for exclusive purpose

to cause damages to the share/folder/collection.

Therefore, an extra rigid update logic needs to be implemented

in order to assure the validity of any files and data structures

floating around, and the overall consistency of data across

all the nodes in a share.

Generally, no r/o node should be able to update any r/w nodes

under ANY circumstances whatsoever, even if some file looks

like it was obtained from some master node by faking some bits

in the data structures passed around.

Secondly, no file may cause an update of other nodes unless

there is a guarantee that it definitely originates in some

master node. That means that the file hash on the originating

master node has to be generated in such a way that faking the

originating source becomes impossible, because the originating

flag (master version) is encoded in the very hash and could be

checked after its decoding and, possibly, comparing the hashes

of the file pieces or even the entire physical file against

the hashes.

Basically, no information, bits or statuses of nearly every

single data structure passed around may be trusted unless

they are encoded with the necessary identification stamps.

The only data that can be trusted is the data that is guaranteed

to come from the master nodes, or guaranteed exact copy of it.

This all means that even if someone modifies the sources for

the program and recompiles it and then tries to use his version

as an attacking weapon, he should not be able to do any harm or

damage to the data, nor to inject, delete or modify the originals.

At the most, he might cause some extra network traffic, which is

also undesirable as this could be used as a permutation of some

bandwidth attack that might choke the nodes with utterly fake data.
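The rule that peer-supplied flags carry no weight can be sketched as follows.
This is only an illustration under stated assumptions, not the BTSync or BEP
logic: the names Offer, accept_offer and master_db are hypothetical, SHA-256
is an arbitrary choice of hash, and master_db stands for a copy of the master
node database (path to file hash) that has already been verified by some
other means.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Offer:
    """A file offered by some peer (hypothetical structure)."""
    path: str
    content: bytes
    from_master: bool  # peer-supplied flag; an attacker can set it freely


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def accept_offer(offer: Offer, master_db: Dict[str, str]) -> bool:
    """Accept a file only if it is an exact copy of the master version.

    The from_master flag is deliberately ignored: it proves nothing.
    """
    expected = master_db.get(offer.path)
    if expected is None:
        # The master database does not know this file, so it is rejected.
        return False
    return sha256_hex(offer.content) == expected


# Usage: a modified client sets the flag, but the hash check still fails.
master_db = {"docs/a.txt": sha256_hex(b"original text")}
genuine = Offer("docs/a.txt", b"original text", from_master=False)
forged = Offer("docs/a.txt", b"garbage", from_master=True)
```

Here accept_offer(genuine, master_db) is accepted and accept_offer(forged, master_db)
is rejected, regardless of what either peer claims about the origin of the file.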

Guaranteeing the master version

In order to fulfill the requirement of data consistency across all

the nodes, about the only realistic alternative is to make sure that

the only files that are propagated around, including from the r/o nodes,

are the files that are GUARANTEED to be the exact copies of the master version.

And that is easier said than done in an open source setting.

Because the attacker may modify his version of the program and set

any elements of the data structures and any flags in any way he wants.

This means that no transfers of any files should be possible unless there exists a

guarantee that either at least one master node is on line, so things

could be checked against it if needed, or there exists a copy of the

master node database, which has all the necessary information about

files, hashes and so on. That means that every r/o node first of all

obtains a master database, which may even come from some other r/o

node if there is a guarantee that it was not faked; such a guarantee is possible.

The master database needs to be stored permanently on each node, so

that it survives across the program restarts.

One thing we know about the master nodes that make them different

from the r/o nodes is the KEY. No r/o node knows about

that key. Otherwise, it can become a master node any time it wants.

This provides us the hook via which we can assure that the things

really originated at the master node. So, when we hash or encrypt

things on the master node we can use the master key similar to

security certificates, private and public. The mechanisms are pretty

much the same.

We may not even have the latest version of the master database

on our node because no master nodes are currently on line. But

that is not a problem. We can still operate and transfer files

even between the r/o nodes that might even update the other r/o

nodes with new files, because those files are stamped in unforgeable

way to prove that they came from the master node.

But when at least one master node comes back on line, we can update

our r/o node databases with the latest and greatest version and

possibly update some files that are newer or fresher than those we

currently have.

With this strategy we can be sure that even if some attacker uses

a custom version of the program, modified for the purpose to attack,

it is not going to work. Because he will not be able to encrypt the

things the same way as the master nodes do, simply because he does

not have the master key.
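To make the "private and public" idea concrete, here is a minimal sketch of
a signature that only the holder of a secret can produce but anyone can check.
It uses a Lamport one-time signature purely because it can be written with
the Python standard library alone. This is an assumption made for illustration:
a real implementation would use an established signature scheme, each Lamport
key pair may sign only ONE message, and all names here (generate_keypair, sign,
verify, manifest) are hypothetical. The private key plays the role of the
master key; the r/o nodes would hold only the public key.

```python
import hashlib
import secrets

BITS = 256  # one pair of secrets per bit of a SHA-256 digest


def generate_keypair():
    """Return (private_key, public_key). Valid for ONE signature only."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32))
               for _ in range(BITS)]
    public = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest())
              for a, b in private]
    return private, public


def _digest_bits(message: bytes):
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = hashlib.sha256(message).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(BITS)]


def sign(private, message: bytes):
    """Master side: reveal one secret per digest bit."""
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    """r/o side: needs only the public key, never the private one."""
    if len(signature) != BITS:
        return False
    return all(
        hashlib.sha256(signature[i]).digest() == public[i][bit]
        for i, bit in enumerate(_digest_bits(message))
    )


# Usage: the master signs a serialized copy of its database (format assumed).
private_key, public_key = generate_keypair()
manifest = b"docs/a.txt <file hash>\ndocs/b.txt <file hash>\n"
signature = sign(private_key, manifest)
```

An r/o node that receives the manifest and signature from any peer, even from
another r/o node, can call verify(public_key, manifest, signature) and reject
the database if a single byte was altered.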

Synthetic synchronized torrent approach

One rather interesting and not quite obvious approach might be simply

using the existing open source torrent code and extending it with sync functionality.

For example, if we take some portable torrent client, written, for example,

in Java, such as Vuze/Azureus, then we already have the bulk of

the work done, including the DHT discovery, uTP protocol implementation

and so on.

From there we have several alternatives:

-

Use the existing torrent mechanisms and construct the temporary

torrents that represent a set of files to be shipped to some

other node.

Basically, to synchronize with some node via procedure of

gradual and incremental discovery of changed files, a node, trying to sync

with some other node, first of all requests the top folder

hash. If that hash is the same as yours, then you are in

sync with it. Otherwise, you request the list of hashes for

the files in the top folder, and if some of them differ

from your own, you need to request those files from that

node in case their version is newer or you are missing

some files.

Some of those files may be subfolders. In that case, you

descend deeper and request the list of files in those

subfolders in the same way.

Once you have gone through all the files on that node,

you send a request to deliver those files to you either

via single torrent or in batches, as you keep traversing

the top folder and discovering more files that need

updating. This is just a matter of efficiency.

-

Construct a single temporary torrent

for the entire collection on some node, which represents the

state of that particular node, and ship it as a whole,

regardless of whether you are in sync or not. That torrent

should contain the necessary information about the entire tree of files/folders,

such as file paths, individual hashes and file update time stamps

in the universal UTC time.

Basically, what you are doing is sending the entire state of your node

to others. They can then compare your state with theirs and, possibly,

request some files from you, whether they represent the newer version

or new files. You can do the same thing, only the other way around,

requesting their state of the node.

This might actually not be as much of an overhead as it might look,

because, first of all, most of the nodes on some share will be in sync with

other nodes and so, there is no further activity required. But the

overhead would probably be there for the nearly synchronized nodes

because you are sending the info for the entire node while in most

cases you are out of sync with them in the amount of a few files only.

But this needs further analysis.

One of the advantages here is that you construct this torrent ONLY

when there are updates on your node.

Once constructed, you can keep it around until your node gets updated

for whatever reason. So, at least for the master side and for most

nearly synchronized nodes you save some time by constructing such

a torrent only once. But you sacrifice some time because you need

to send out more data than necessary.

It might turn out that your overall overhead is not as significant as it might look on paper.

Because shipping even a single torrent for the entire collection

is probably comparable to shipping a single file from that collection.

And, if we are talking about the audio, video, PDF or picture collections,

then a descriptor torrent is very likely to be comparable to the

average file size, if not significantly smaller than that.

But the benefit is that you only do a single transfer which

contains the complete state of your node. So, it looks like a

statistical issue and it will highly depend on the collection type.

-

When some files are changed/updated/added/deleted on some node,

you send the "updated" information notification to all other nodes.

After that, they send you back the requests to deliver those files

to them. It could be a number of files simultaneously, if, for

example, you have added the entire subdirectory to the share.
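The gradual discovery procedure from the first alternative above (compare the
top folder hash, then descend only into the subfolders that differ) can be
sketched like this. It is a simplified illustration under several assumptions:
both trees are held in memory as nested dictionaries, whereas a real node
would request the remote hashes over the network; SHA-256 is an arbitrary
choice; and the names entry_hash and changed_paths are hypothetical. The
sketch only finds files that differ or are missing. Deciding which version
is the valid one is a separate question.

```python
import hashlib
from typing import Dict, List, Union

# A folder maps names to file contents (bytes) or to subfolders (dict).
Tree = Dict[str, Union[bytes, "Tree"]]


def entry_hash(entry) -> str:
    """Hash of a file, or combined hash of everything inside a folder."""
    if isinstance(entry, bytes):
        return hashlib.sha256(b"file:" + entry).hexdigest()
    combined = hashlib.sha256(b"dir:")
    for name in sorted(entry):  # sorted, so every node gets the same hash
        combined.update(name.encode("utf-8") + b"\0")
        combined.update(entry_hash(entry[name]).encode("ascii") + b"\n")
    return combined.hexdigest()


def changed_paths(local: Tree, remote: Tree, prefix: str = "") -> List[str]:
    """Paths of remote files that are missing locally or differ."""
    if entry_hash(local) == entry_hash(remote):
        return []  # folder hashes match: nothing to do below this point
    result: List[str] = []
    for name in sorted(remote):
        theirs = remote[name]
        mine = local.get(name)
        if mine is not None and entry_hash(mine) == entry_hash(theirs):
            continue
        if isinstance(theirs, dict):
            # Descend into the subfolder; a missing one counts as empty.
            sub = mine if isinstance(mine, dict) else {}
            result.extend(changed_paths(sub, theirs, prefix + name + "/"))
        else:
            result.append(prefix + name)
    return result


local = {"a.txt": b"1", "docs": {"x": b"old", "y": b"same"}}
remote = {"a.txt": b"1", "b.txt": b"2",
          "docs": {"x": b"new", "y": b"same", "z": b"added"}}
print(changed_paths(local, remote))  # ['b.txt', 'docs/x', 'docs/z']
```

The resulting list is what would be requested from the other node, either as
one temporary torrent or in batches.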

Basically, there are variations on this theme and things need

further consideration and a more detailed analysis. But what

we achieve with this approach is quite a bit. First of all,

we would already start with a fully functioning torrent program,

and, if it is portable across several platforms, we can immediately

start working on implementing the sync functionality in small

incremental steps instead of starting the whole thing from scratch.
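The second alternative, shipping the complete state of a node, needs a
descriptor with file paths, hashes and update time stamps in UTC. A minimal
sketch of building and comparing such descriptors follows. The structure
FileState and the functions build_state and files_to_request are hypothetical
names, and the descriptor is a plain dictionary rather than an actual torrent
file. Note that the comparison uses the hashes only; the time stamp is carried
along as information and is not trusted for decisions.

```python
import hashlib
import os
from dataclasses import dataclass
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


@dataclass(frozen=True)
class FileState:
    sha256: str
    modified_utc: str  # ISO 8601 time stamp in UTC


def build_state(root: Path) -> Dict[str, FileState]:
    """Describe every file under root: relative path -> hash and mtime."""
    state: Dict[str, FileState] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = Path(dirpath) / name
            relative = full.relative_to(root).as_posix()
            # Reads the whole file at once; large files would be hashed
            # in pieces in a real program.
            digest = hashlib.sha256(full.read_bytes()).hexdigest()
            mtime = datetime.fromtimestamp(full.stat().st_mtime,
                                           tz=timezone.utc)
            state[relative] = FileState(digest, mtime.isoformat())
    return state


def files_to_request(mine: Dict[str, FileState],
                     theirs: Dict[str, FileState]) -> List[str]:
    """Files the other node has that we lack or hold with another hash."""
    return sorted(
        path for path, remote in theirs.items()
        if path not in mine or mine[path].sha256 != remote.sha256
    )
```

A node would call build_state only when its files change, keep the result
around, and send it to any peer whose top folder hash differs from its own.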

Single modifiable torrent approach

Let us consider the single modifiable torrent approach to syncing.

The goal of this approach is to minimize the amount of development

effort to get the initial version going, while, at the same time,

assuring that all the nodes are updated to the "latest and greatest"

version.

Furthermore, it has to provide the guarantee of data consistency across

all the nodes, meaning that all the nodes contain the correct and

"latest" version of all the files. (Note: latest is in quotes because

the very notion of file time stamp is quite illusory, at least in

cases of a deliberate "time stamp" attack on some share.)

Also, it would be interesting to investigate the possibility of

uniting the ordinary torrent functionality while extending the torrent

client program to also do the sync operations. There is quite a bit

of common information GUI-wise between the regular torrent operations

and syncing, which makes it worth investigating further.

It might turn out that the easiest way to implement such an architecture would

be to stay as close to the original torrent transfer concept as possible,

and that is - to use the torrents for the entire collection rather than

doing it in a more fine grained manner by constantly constructing smaller

torrents for only a part of a collection. But there might be potential

performance degradation in case of using the single torrent for the

entire collection versus a number of smaller torrents that only contain

the modified or new files.

But single torrent approach might exhibit the additional difficulties of

synchronizing the updates in case there is pending transfer of some torrent

while some file was updated in the middle of transfer operation. But

this is one of those more "esoteric" aspects which, by the way, would

have to be dealt with even if we handle transfers by constructing a number

of smaller torrents representing the deltas only.

This approach assumes that all the top level communication exchanges

begin with top folder hash. Top folder hash is the combined hash

of all the files in a share/top folder/collection. Further

action is required only if those hashes differ on different nodes.

Else, the sync state has been achieved and there is nothing to do

beyond periodic scanning to recalculate all the hashes and, ultimately,

the top folder hash, which is the main or "key" hash for the share.

About hashing.

The idea is that you gradually and incrementally reach the state

where all the nodes on a share contain the same collection,

represented by the same torrent file. That is the stable and final

state of the share and it implies that all the nodes are in sync.

But there might be a difficulty here because there is a contradiction.

On one hand, we are trying in effect to create a single torrent

that represents the master collection. That would imply that all

the nodes would eventually end up with the same torrent file when

they are all fully synchronized.

But, since any node may ignore some files from the master collection, that would mean

that their version of the torrent might be, and is likely to be,

different than on a master node. But there might be a solution

to that, which allows for a SUBSET of the master node files to be

distributed. Because it is still valid, correct and current with the

exception that it is not complete.

But it is just one node's view. When you contact the other nodes, you are likely to see a slightly

different version of the same collection, except THEIR ignores are

likely to be different. But it does not matter. What matters is that

they might have some files that are missing in the first node, and

that is what matters.

Because you enlarge the view on the complete

collection by using the union operation on all the data on all the

nodes on line. The more nodes, the more chance that your own version

will be as close to the master version as possible.

But the files themselves are exactly the same as on

the master node(s). This needs further analysis. One of the solutions

might be to have the ability to cancel the pending transfers once

we detect the change in the file system and then restart some

transfers once new torrent file is constructed.
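The union of the per-node views described above can be sketched as follows.
Each view is assumed to be a simple mapping from file path to file hash, which
is a simplification of a real torrent; the name merge_views is hypothetical.
Since every propagated file is supposed to be an exact copy of the master
version, two nodes reporting different hashes for the same path is treated
as a conflict that can only be settled against the master node hashes.

```python
from typing import Dict, Iterable, Set, Tuple

View = Dict[str, str]  # file path -> file hash, as seen by one node


def merge_views(views: Iterable[View]) -> Tuple[View, Set[str]]:
    """Union of all node views, plus the paths whose hashes disagree."""
    merged: View = {}
    conflicts: Set[str] = set()
    for view in views:
        for path, digest in view.items():
            if path in merged and merged[path] != digest:
                conflicts.add(path)
            else:
                merged[path] = digest
    # Conflicting paths are withheld until checked against a master node.
    for path in conflicts:
        del merged[path]
    return merged, conflicts


# Three nodes, each ignoring different files; one has a modified "a.txt".
node1 = {"a.txt": "h1", "b.txt": "h2"}
node2 = {"b.txt": "h2", "c.txt": "h3"}
node3 = {"a.txt": "hX", "d.txt": "h4"}
merged, conflicts = merge_views([node1, node2, node3])
```

With these three views, merged covers b.txt, c.txt and d.txt, which is more
than any single node offers, while a.txt ends up in conflicts.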

-

Again, the 1st step in this procedure is to request a top folder

hash (or a magnet link) from some node and if that hash is the same

as yours, there is nothing to be done with this node.

-

If top folder hash is different than yours, then you request the

torrent for the entire collection from that node.

-

Any master (r/w) node, at any given time may produce changes to the collection

by modifying, adding, deleting, restoring or renaming files. So,

in one way or another, that node will look "newer" than others.

(According to data integrity across the nodes rule, the r/o nodes

may not perform any modifications on any files. Even if they do so,

their files will be re-downloaded from the master version).

But it does not mean that ALL the information it has needs to

be distributed to other nodes. For example, some r/o node may

decide to modify some file, but that file is not necessarily

to be delivered to other nodes because that file has different

hash than the same file on the master node, which means that

his modification is in fact invalid and is to be overwritten

with a version of the master node or the reference node, the

original supplier of the information.

But if some file is on the ignore list, then it can be modified,

deleted and even restored, none of which will propagate to

other nodes, nor will it be updated from the other nodes

(this is the BTSync version of logic).

Because it will be considered a private and "untouchable" version

on this particular node.

Ignore means this file does not exist for any purposes whatsoever,

as far as this share and the sync process is concerned.

Actually, this case may get complicated by the fact that there may be several reference

nodes for the same file. Meaning that even though there was

some originating node for this information, later on, it

decided to pass the reference (or master) status (for this particular file) to other nodes,

so that they were allowed to update the same file(s). For example,

a publisher may have an original writer. But once the article

is written, it then goes to a number of editors, for style,

syntax, the volume and so on. Which means that all of these

people are now the reference nodes and the latest version of

the file prevails and is distributed to other reference/master

nodes.

-

Once you request or are informed about the top hash modification,

your node then requests the torrent file from the node that

notified it about modification. At that point, you analyze this

torrent according to the rules and decide if you want to update

some of your files according to that torrent. In case you do,

you request those files, and once done downloading them, update

your own torrent to correspond to the state of your node.

Note: there is one uncomfortable element as far as torrent

files go. The standard torrent file does not have the field

with file update date/time. In this respect the syncing

process in this particular design differs from the purely

torrent approach in that in torrent clients, there is no need to know the file update

time. As long as they differ, the file needs to be updated.

All you need is the hashes of the individual pieces. But the

update time is irrelevant.

But there is a solution that avoids adding a time stamp to the torrent

and making it "non-standard". You can still use the standard torrents

that do not contain the time stamps, but only contain the list

of files and list of hashes. If you find that some file in a

torrent has a different hash, then you request the supplier

to provide you the time stamp in a separate network exchange,

if you decide to honor the very idea of a time stamp as something

significant and reliable in your opinion. So now, since most of

the nodes are usually in the state of full sync and any updates

usually happen on individual files, this additional overhead

of requesting the time stamp via separate network exchange becomes

a matter of statistics and may not impact the overall performance

to any measurable degree. Furthermore, this can be done not just

for a single file that differs, but for any number of files in

one go, and then sending the request to get their mod-times in a

single network exchange.

But... Basically, the very idea of time stamping the file modifications

is not a trustworthy mechanism, and for several reasons. For example, in

BTSync we constantly observe the nodes that completely refuse to

sync if their clock differs by more than 6 minutes from the other

nodes. What we have seen is up to 30% of all the nodes might show up

as being out of range in terms of acceptable time difference.

Interestingly enough, most of those nodes have a 1 hour difference,

which looks like the time zone issue. Furthermore, we have seen

the nodes that have their clocks off by DAYS! So, how can you trust

the file modification time in those situations? What if their time

is set in the future and everyone starts syncing with them?

Mind you, this is not even some attack from them, but something

they are simply not aware of or pay any attention to for whatever reason.

But in terms of syncing, the mere fact that your file hashes are different is still insufficient

in terms of figuring out which version of the file is either more current or more

"authentic", or more "correct". Basically, if the hashes differ, about the only way to know

which version is "legal" is to compare those hashes to the version

on the master or reference nodes or the guaranteed copies on

other r/o nodes, as long as there exists a guarantee that they

represent the exact copy of the file.

Again, the idea here is that no "newer" version of the file is

considered to be valid or acceptable unless it was modified and

supplied by the master nodes,

regardless of the "newness" of a time stamp, which is guaranteed

by default in a "hardcore logic", which implies that the r/o nodes

may propagate only the master node versions of the files. All

modifications by the r/o nodes are considered to be the local

or private copies and are in fact subject to being overwritten

by the master node versions unless included in the SyncIgnore files.

Because in case of an attack on a share via "time stamp attack" the file(s) may look "newer",

but in fact they are not even the same files, but nothing more

than fake data. They have been modified by the attacker to

sabotage the share.

But, "any way you cut it", merely carrying the file update time stamp with

torrents does not really resolve the time stamp attack situation,

because time stamps can not be trusted, especially in public applications.

This means that about the only way to detect such an attack is to refer to the hash

of the master version of the file.

-

At any given point, any torrents "out there" that are different

from the master node can not be classified as "authoritative".

The ULTIMATE reference is the reference node. After that, goes

the master node, which contains the exact copies of every single

file in the collection. So, this is the protective mechanism

to assure the integrity and consistency of data across the nodes.

It is possible to design a mechanism where files on r/o nodes

can be trusted even if there are no master nodes on line. For

example, we can add a flag "master version" which will be set

in case this particular r/o node can verify at any given point

that this particular version of the file is indeed an exact copy

of the master version. There are several ways to design such

a mechanism.

Actually, with "hard core" data consistency logic, every file

in the database of the r/o node is AUTOMATICALLY guaranteed

to be the master/reference node version. It won't be

propagated to other r/o nodes in any other version, because its key

differs from that of the master node version, and any modification

of the file by the r/o node in the file system won't change

its status, its piece hashes or its file hash in the database.

So, the only files that are propagated are guaranteed master

node versions.

-

So, by shuffling these per node torrents around, you are informing

the other nodes of a total and final state of the share, which,

ultimately, is determined by the reference or master nodes.

-

No r/o node may update any other r/o nodes with any files that

do not have the same hash as on the master (r/w) nodes. And

under NO circumstances whatsoever may the r/o node update the

master nodes or the reference node.
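
The two rules above can be sketched as a single decision function.
This is only an illustration under stated assumptions: the role names
"ro", "master" and "reference" and the function name are hypothetical,
and the hashes are plain strings standing in for real file hashes.

```python
def ro_node_may_update(receiver_role: str, file_hash: str, master_hash: str) -> bool:
    """Decide whether an r/o node may send a file to another node.

    receiver_role is "ro", "master" or "reference" (hypothetical labels).
    """
    # Rule 2: an r/o node never updates a master or the reference node.
    if receiver_role != "ro":
        return False
    # Rule 1: between r/o nodes, only the master version may travel.
    return file_hash == master_hash


# Same hash as on the master: allowed between r/o nodes.
print(ro_node_may_update("ro", "abc123", "abc123"))
# Locally modified file (different hash): refused.
print(ro_node_may_update("ro", "ffff00", "abc123"))
# Any attempt to update a master node: refused, whatever the hash.
print(ro_node_may_update("master", "abc123", "abc123"))
```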

-

So, eventually, the collection state will stabilize and all

the nodes will update all those files that they wish to update

and to be updatable on their nodes. But even if some node notifies

the other node(s) of updated files, it does not imply that those

nodes are obliged to download those files to update their

share/collection (take, for example, the case where the r/o node

has this particular file in its ignore list).

Logically, however, no r/o node may modify any files and expect

them to be propagated to other nodes.

-

The main difference between this approach and pure torrent approach

is that you do not exchange the torrent files themselves between

the users in order for them to be able to join the share/collection. Because

they are dynamic and change all the time. In fact, those torrents

are temporary and are invisible to the user. They are used by the

internal program mechanisms and are not meant to be advertised as

an identity or a key for the share. But you can exchange

the master node versions of torrents. This would mean that every

node may also have a copy of the master torrent in addition to

their own local version. (This needs further analysis.)

But you identify this collection/share by the share key

(or a magnet link), which is the same for everyone regardless

of the state and condition of the data on any nodes.

The share key identifies the collection, and any node, even an empty

one, may join it and immediately start downloading the files from

all the nodes on line, just like with torrents. Except in our case

we discover "the latest and greatest" version of a modifiable torrent

via this key, then node discovery, then requesting the latest torrent

guaranteed to be the exact copy of the master (r/w) or reference node(s).

But the underlying mechanisms are entirely torrent based.

-

It has to be noted that any files added by the r/o node do not

automatically get included in the collection. The same thing with

deleted or renamed files. The deleted files still remain in the

collection database and will not be deleted on the other r/o nodes.

In fact, they will be re-downloaded from the nodes that have the

master copy of them. The same with renamed files. The files with

old names will still remain in the collection, and will be handled

in exactly the same way as deleted files, and that is, restored.

But their new copies will NOT become a part of the collection.

Simply because they do not exist on the master (r/w) nodes.

The same thing is with modified files. ANY modification of any

files on the r/o nodes will be overwritten with the master version.

All of these are the "hard core" data consistency rules that can not be

violated under ANY circumstances. Because they guarantee the consistency

(the same exact copy) of the files across ALL the nodes. Else, the eventual

data degradation is virtually guaranteed, no matter what other rules

you might implement.
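
A minimal sketch of how an r/o node could apply these rules, assuming
it holds a master listing and a local listing that each map a file
path to a hash. The function name, the listing format and the category
names are hypothetical; the ignore set stands in for the SyncIgnore
mechanism mentioned earlier.

```python
def plan_reconcile(master: dict, local: dict, ignored: frozenset = frozenset()) -> dict:
    """Compare an r/o node's files with the master listing (path -> hash)."""
    plan = {"download": [], "overwrite": [], "not_shared": []}
    for path in sorted(master):
        if path in ignored:
            continue  # the node chose not to sync this file
        if path not in local:
            # Deleted locally, or renamed away: restore the master copy.
            plan["download"].append(path)
        elif local[path] != master[path]:
            # Modified locally: the master version wins.
            plan["overwrite"].append(path)
    for path in sorted(local):
        if path not in master:
            # Added locally, or the new name of a renamed file:
            # stays local and never becomes part of the collection.
            plan["not_shared"].append(path)
    return plan


master = {"a.txt": "h1", "b.txt": "h2", "c.txt": "h3"}
local = {"a.txt": "h1", "b.txt": "edited", "c_renamed.txt": "h3", "notes.txt": "h9"}

plan = plan_reconcile(master, local)
print(plan)
```

Here b.txt was edited and is overwritten, c.txt was renamed and is
restored under its old name, and both c_renamed.txt and notes.txt stay
outside the collection.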

The perfect example of it would be the sync

inconsistencies and update issues in BTSync.

In particular, their design principle "the user knows better" is

a logical absurdity as far as data consistency goes and virtually guarantees the

eventual data inconsistency across the nodes, and even worse than

that, some files may stop syncing completely and you won't be able

to restore their normal sync state no matter what you do. You'd have

to delete that share from BTSync and re-add it again. But then you

lose all your local file modifications and deletions, in case you

have deleted or modified some files before you re-added the share

and expect them to remain deleted. ALL of your files will be

restored to the master node's version.

BTSync behavior table

This is just a rough sketch which will require much more detailed

analysis of all possible cases. But this is something that might

turn out to be the easiest, simplest and most effective way

to go about it: starting with some open source torrent client

code base.

Single modifiable torrent approach via magnet link

One interesting aspect of using a single torrent file for the entire

collection is that if that torrent file is in standard .torrent format,

then it can be either made directly available to download by anyone,

or even better, you might refer to it via magnet link, which is

effectively the same thing as a key to the collection, except it does

not have the torrent file itself. But the magnet link itself provides

further information for you to recover the torrent file itself. The

link is the same, but the content of what it points to is different.

That makes it like a GUID of some sort. The link itself is unique.

That means that you simply provide the magnet link and it is eventually

resolved into a torrent file. In the most general case, the magnet link

contains only the hash or a key for the collection. The peer discovery

happens via DHT protocol. But it has the provisions to provide the

addresses of several trackers, just like torrents. If no trackers are

provided in the magnet link, the only way to discover the peers would

be via DHT or via the exact source or the fall-back source address of a torrent file.

You can specify those in the magnet link text.
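
To make the structure of such a link concrete, here is a small sketch
that splits a magnet link into its parts with the Python standard
library. The parameter names xt (the hash or key), tr (tracker), xs
(exact source) and as (fall-back source) follow the common magnet link
convention; the hash and the tracker addresses in the example are
made up, and the function name is hypothetical.

```python
from urllib.parse import urlparse, parse_qs


def parse_magnet(link: str) -> dict:
    """Split a magnet link into the fields discussed above."""
    parsed = urlparse(link)
    if parsed.scheme != "magnet":
        raise ValueError("not a magnet link")
    params = parse_qs(parsed.query)  # also decodes %-escapes
    info = {
        "topic": params.get("xt", [None])[0],      # hash / key of the collection
        "trackers": params.get("tr", []),          # optional tracker addresses
        "exact_source": params.get("xs", []),      # optional address of the torrent file
        "fallback_source": params.get("as", []),   # optional fall-back address
    }
    # With no trackers and no source addresses, only DHT discovery is left.
    info["dht_only"] = not (
        info["trackers"] or info["exact_source"] or info["fallback_source"]
    )
    return info


# Hypothetical link: the hash and the tracker hosts are placeholders.
link = (
    "magnet:?xt=urn:btih:0123456789abcdef0123456789abcdef01234567"
    "&tr=udp%3A%2F%2Ftracker.example.org%3A6969"
    "&tr=http%3A%2F%2Ftracker.example.net%2Fannounce"
)
info = parse_magnet(link)
bare = parse_magnet("magnet:?xt=urn:btih:0123456789abcdef0123456789abcdef01234567")
print(info["topic"], info["trackers"], bare["dht_only"])
```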

Basically, the magnet link differs from the direct torrent approach

in that it represents the mechanism of mapping a unique torrent

content to a universal and ever valid identifier of the collection.

There is really no need to carry around the static torrent files

that inevitably get outdated. All you need is the universal one-to-many

mapping mechanism, which is what the magnet links attempt to provide.

The actual torrent file corresponding to the magnet link is obtained

directly by the peers. This means that in our approach we do not

need to pass around the torrent files themselves, and, secondly, as

soon as we discover at least one other peer, we will obtain "the

latest and greatest" torrent file from them directly. Now, once we

discover at least one peer, from then on, regardless of how "old"

or outdated our own torrent file is, regardless of how we obtain it,

we, from then on, are guaranteed to eventually obtain "the latest

and greatest" version of the torrent and collection.

This approach could be quite interesting to consider.

Yes, it would work fine if collections do not change. Otherwise,

you have to make sure that torrent clients always refer to this share

via magnet link, and not via torrent, every time they join it.

So, this means that by simply modifying the magnet link text

you effectively refer to a different torrent file. This needs

more analysis though.

Sync general design issues

Several things may be noted here.

The basic idea used by BTSync is to use torrents as the underlying

mechanism and an engine to do the transfers and updates.

This is a pretty good idea, and for several reasons.

First of all, there is no need to "reinvent the wheel".

The entire transfer mechanism is well developed by now and

is available in open source versions.

The basic idea is simple enough. You simply use the same torrent

transfer engine except you create the dynamic and temporary

torrents on the fly instead of creating a single torrent of

fixed contents for the entire share or collection of information.
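
A rough sketch of what "dynamic and temporary torrents" could look
like in practice, under stated assumptions: each file is cut into
fixed-size pieces and each piece is hashed with SHA-1, as classic
torrents do, but the manifest is rebuilt whenever the file changes.
The manifest layout, the tiny piece size and all names are
hypothetical and chosen for illustration; this is not the .torrent
file format and not BTSync's actual implementation.

```python
import hashlib

PIECE_SIZE = 16  # bytes; unrealistically small, just for the example


def piece_hashes(data: bytes, piece_size: int = PIECE_SIZE) -> list:
    """Hash every fixed-size piece of a file."""
    return [
        hashlib.sha1(data[i:i + piece_size]).hexdigest()
        for i in range(0, len(data), piece_size)
    ]


def build_manifest(files: dict) -> dict:
    """Build a throwaway, torrent-like description of the current files."""
    return {
        path: {"length": len(data), "pieces": piece_hashes(data)}
        for path, data in sorted(files.items())
    }


def changed_pieces(old: list, new: list) -> list:
    """Indices of the pieces that differ and therefore need a transfer."""
    return [i for i in range(len(new)) if i >= len(old) or old[i] != new[i]]


v1 = b"A" * 16 + b"B" * 16
v2 = b"A" * 16 + b"C" * 16 + b"D" * 4  # second piece edited, third appended

old = build_manifest({"doc.txt": v1})
new = build_manifest({"doc.txt": v2})
to_fetch = changed_pieces(old["doc.txt"]["pieces"], new["doc.txt"]["pieces"])
print(to_fetch)
```

Only the pieces whose hashes differ have to be transferred, so an
updated file does not require a brand new torrent for the whole
collection.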

The MAIN disadvantage of a static, per collection, torrent is that

it can not be updated, extended, modified and so on. Yes, its main

advantage is that it guarantees you the integrity of a collection

and gives you an assurance of reliability of data and guarantees

that no data within the collection may be modified. But that is

not what is needed in the modern information age.

The nature of information in general is dynamic. It changes all the

time. And that is why torrents get outdated. But the problem with

them is that they can not be changed once created. You'd have to

create new and new torrents for new versions of information, which

is highly undesirable. It would be preferable to keep a single

version that could be updated, extended and so on.

Now, since torrent transfer engines and mechanisms are a mature

technology by now, it does make sense to use them instead of

inventing the whole engine again. And that implies that at least

30 to 70% of the work is done once you can use the same technology

and create the dynamic and temporary torrents to perform the transfers.

What remains at that point is the high level control logic and

GUI. At that point, you have about 90% of all the work done.

The remaining mechanisms are key mapping to share/collection,

node discovery, such as using the DHT or "predefined hosts",

used as general purpose trackers, and various hashing algorithms

and traffic encryption. And that is all there is to it, pretty much.

None of it is that "esoteric" once you start looking at it with

a competent enough eye.